NEWS

Our application, Senbay, is now available for iOS!

http://www.senbay.info

Senbay is a video recording application that embeds various sensor data streams (acceleration, gyroscope, geolocation, and more) into the video as an animated QR code.

Featured Work

EverCopter with SENSeTREAM (2015)

Japan still faces major risks from earthquakes and tsunamis. Because large disasters can damage ground-based communication infrastructure, an alternative means of communication among citizens and devices is necessary. We propose a novel disaster monitoring system that combines satellite and drone technology. When a major disaster occurs, quasi-zenith satellites broadcast an urgent message to the drones. The drones then fly automatically to monitor the real-time situation on the ground using their cameras and sensors. In addition, to enable continuous monitoring from the sky, we developed special drones called EverCopter, which can fly for a very long time because electricity is supplied to them via a power cable. Finally, monitoring information such as video, location, and various environmental data is stored or broadcast using SENSeTREAM technology. SENSeTREAM integrates sensor data into a standard video format by means of an animated two-dimensional code, so the drones can broadcast video with embedded sensor data through popular platforms and services. By combining the quasi-zenith satellite broadcast system, EverCopter, and SENSeTREAM, we provide a resilient smart city system.

Social Open BigData Generation&Distribution Platform (2015)

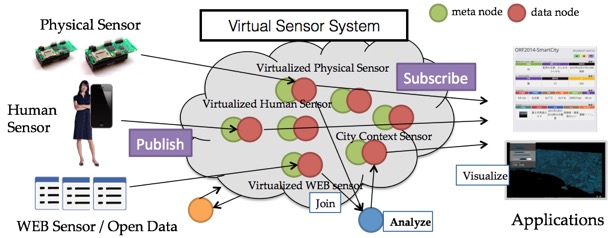

To realize a smart city that provides efficient city life, business, and a better quality of life for its various stakeholders and citizens, it is important to determine how to collect and distribute the massive data from which the city's past, present, and future can be inferred.

We developed a social open big data generation & distribution platform. You can download our software and tools, start publishing your own sensors, and subscribe to massive data streams of over 10 GB/day!

Please visit our development site and have fun with it.

Published Works

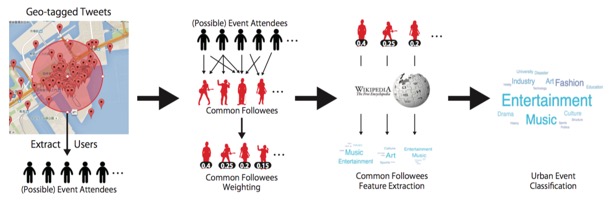

City Happenings into Wikipedia Category (Joint work with Shoya Sato)

Recently, many researchers have focused on detecting and classifying urban events by analyzing information on social networks. Previous works mainly use text analysis of users' posts to detect urban events. However, this approach is limited in that the posts must mention the event for the analysis to work. We propose a new method for classifying urban events by extracting user interests from location-based social network information without text analysis. The proposed method analyzes the common friends of users in the vicinity of the event venue and extracts those friends' common attributes by referring to related Wikipedia information. We designed and implemented the proposed method and conducted an experiment to evaluate it. The experimental results show that our method classifies events well when participants have similar interests. PDF
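The two steps described above (finding the friends that nearby users share, then looking up those friends' Wikipedia categories) can be sketched with simple counting. This is a hypothetical illustration: the friend lists, the mapping from accounts to Wikipedia categories, the `min_shared` threshold, and the function names are assumptions, and the published method may weight or filter attributes differently.

```python
from collections import Counter
from typing import Dict, Iterable, List, Set, Tuple


def common_friends(participants: Iterable[str], friends_of: Dict[str, List[str]],
                   min_shared: int = 2) -> Set[str]:
    """Friends that at least `min_shared` users near the venue have in common."""
    counts = Counter(f for p in participants for f in set(friends_of.get(p, [])))
    return {f for f, c in counts.items() if c >= min_shared}


def top_categories(friends: Set[str], categories_of: Dict[str, List[str]],
                   top_n: int = 3) -> List[Tuple[str, int]]:
    """Most frequent Wikipedia categories among those shared friends."""
    counts = Counter(cat for f in sorted(friends) for cat in categories_of.get(f, []))
    return counts.most_common(top_n)


# Hypothetical data: three users checked in near the same venue.
friends_of = {
    "u1": ["band_a", "livehouse_x"],
    "u2": ["band_a", "team_y"],
    "u3": ["band_a", "livehouse_x"],
}
categories_of = {
    "band_a": ["Japanese rock music groups", "Musical groups from Tokyo"],
    "livehouse_x": ["Japanese rock music groups"],
    "team_y": ["Baseball teams in Japan"],
}
shared = common_friends(["u1", "u2", "u3"], friends_of)
print(top_categories(shared, categories_of))
# [('Japanese rock music groups', 2), ('Musical groups from Tokyo', 1)]
```

The dominant categories then serve as a label for the kind of event the crowd is attending.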

ReFabricator: Integrating Everyday Objects for Digital Fabrication (Joint work with Suguru Yamada and Hironao Morishige)

Since current digital fabrication relies heavily on 3D printers, there are several concerns, such as printing cost (both financial and temporal) and the sometimes overly homogeneous impression of plastic filament. To address this problem, we propose ReFabricator, a computational fabrication tool that integrates everyday objects into digital fabrication. ReFabrication is a fabrication concept that mixes the ideas of reuse and digital fabrication; it aims to fabricate new functional shapes from ready-made products, effectively utilizing their behavior. As a system prototype, we implemented a design tool that enables users to gather everyday objects and reassemble them into another functional shape, taking advantage of both analog and digital fabrication. In particular, the system calculates the optimal positional relationship among the objects and generates joint objects that bond them together to achieve a target shape.

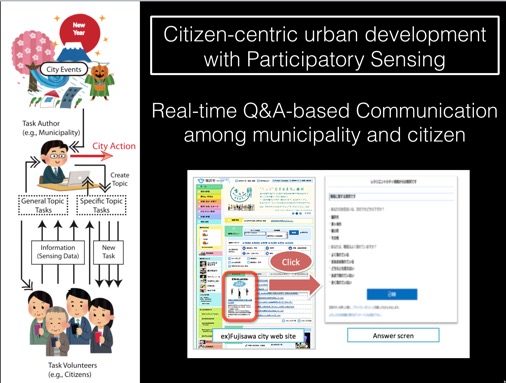

MinaQn: Web-based Participatory Sensing Platform for Citizen-centric Urban Development (Joint work with Mina Sakamura)

We present MinaQn, a web-based participatory sensing platform that enhances bidirectional communication between municipalities and citizens for citizen-centric urban development. To make cities more comfortable to live in, municipalities need to collect citizens' demands and reflect them in their administrative work. MinaQn enables city officers to create various questionnaires for citizens easily, and citizens can answer the questionnaires, together with their location information, from any device through a web interface. The answers are collected as sensor data with a unified format and protocol, so the data can be shared and used by various applications. We designed and implemented MinaQn with web and XMPP technology, and carried out a two-week experiment in cooperation with three cities in Japan. Based on the experiment, we measured the effectiveness of MinaQn with qualitative and quantitative data. We also evaluated how citizens feel about providing their location information for the cities' administrative work.

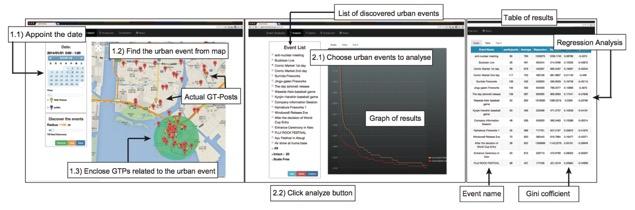

Classifying Urban Events’ Popularity by Analyzing Friends Information in Location-based Social Network (Joint work with Makoto Kawano)

Recent progress and the spread of smartphones and social network services have enabled us to post text messages with GPS location data anywhere, anytime. Since these location-based SNS messages often refer to urban events, many researchers have tried to recognize urban events by analyzing them. To support various applications based on urban event information, we propose a new event indicator called Popularity, which represents how popular an urban event is. Popularity is estimated by analyzing the social network friends of the event's participants. To evaluate this new indicator, we designed and implemented an intuitive, interactive web-based tool for analyzing the Popularity of events. Through comparative experiments, we confirmed that the proposed method estimates the Popularity of events with a certain degree of accuracy.

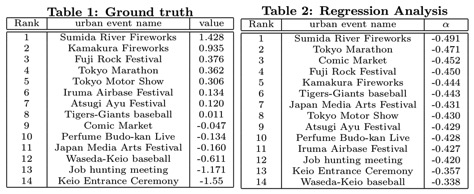

SENSeTREAM: Enhancing Online Live Experience with Sensor-Federated Video Stream Using Animated Two-Dimensional Code

We propose a novel technique that aggregates multiple sensor streams generated by entirely different types of sensors into a visually enhanced video stream. This paper presents the major features of SENSeTREAM and demonstrates how it enhances the user experience of an online live music event. Because a SENSeTREAM is a video stream with sensor values encoded in a two-dimensional graphical code, it can transmit multiple sensor data streams while keeping them synchronized. A SENSeTREAM can be transmitted via existing live streaming services and saved to existing video archive services. We implemented a prototype SENSeTREAM generator and deployed it at an online live music event. Through this pilot study, we confirmed that SENSeTREAM works with popular streaming services and provides a new media experience for live performances. We also indicate future directions for visual stream aggregation and its applications. (Link to the paper)
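Because every frame carries its own code, the sensor values that share a frame stay synchronized with each other and with the video. The sketch below shows only the payload side of that idea under stated assumptions: the text layout, the field names, and the function names are hypothetical (not the published SENSeTREAM format), and rendering each payload as a QR code would be left to any 2-D code library.

```python
from typing import Dict, Tuple

# Hypothetical payload layout: "t:<ms>,<sensor>:<value>,..." rendered as one
# 2-D code per video frame. Sensor names must not contain ":" or ",".


def encode_frame(timestamp_ms: int, readings: Dict[str, float]) -> str:
    """Pack one frame's timestamp and the latest value of every sensor."""
    fields = [f"t:{timestamp_ms}"]
    # Sorted keys give a stable payload; repr() round-trips floats exactly.
    fields += [f"{name}:{value!r}" for name, value in sorted(readings.items())]
    return ",".join(fields)


def decode_frame(payload: str) -> Tuple[int, Dict[str, float]]:
    """Recover the timestamp and the per-sensor values from one frame."""
    pairs = dict(field.split(":", 1) for field in payload.split(","))
    timestamp_ms = int(pairs.pop("t"))
    return timestamp_ms, {name: float(value) for name, value in pairs.items()}


payload = encode_frame(1500, {"lon": 139.43, "accel_x": 0.12, "lat": 35.39})
print(payload)  # t:1500,accel_x:0.12,lat:35.39,lon:139.43
print(decode_frame(payload))
```

Keeping the payload as plain text means any viewer that can read a QR code from a video frame can recover the values without a custom container format.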

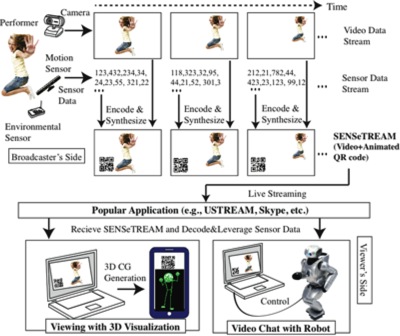

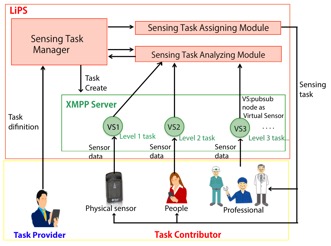

LiPS: Linked Participatory Sensing for Optimizing Social Resource Allocation (Joint work with Mina Sakamura)

We propose a concept of linked participatory sensing, called LiPS, that divides a complex sensing task into small tasks and links them together to optimize social resource allocation. Participatory sensing has recently been spreading, but its sensing tasks are still very simple and easy for participants to handle (e.g., "Please input the number of people standing in the queue."). To handle high-level tasks that require specific skills, such as engineering or medical expertise, or a specific authority, such as that of an event organizer, social resource allocation must be optimized because the number of such professionals is limited. To accomplish complex sensing tasks efficiently, LiPS divides a complex sensing task into small tasks and links them by assigning appropriate sensors. LiPS treats physical sensors and humans as hybrid multi-level sensors, and the task provider can arrange social resource allocation for the goal of each divided sensing task. In this paper, we describe the design and development of the LiPS system. We also conducted an in-lab experiment with the first prototype of the hybrid sensing system and discussed a model for a further system based on users' feedback.
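The paragraph above does not specify an allocation algorithm, so the following is only a hypothetical greedy sketch of the idea: subtasks are assigned most-constrained first, so that scarce human experts are reserved for the subtasks only they can perform. The skill names, capacities, and greedy rule are assumptions; an optimal allocation could instead be computed with a bipartite matching or an integer program.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Set


@dataclass
class Sensor:
    """A physical sensor or a human participant, described by what it can do."""
    name: str
    skills: Set[str]
    capacity: int = 1  # how many subtasks it can take on


def allocate(subtasks: Dict[str, str], sensors: List[Sensor]) -> Dict[str, Optional[str]]:
    """Greedy assignment of subtasks (name -> required skill) to sensors."""
    remaining = {s.name: s.capacity for s in sensors}

    def candidates(skill: str) -> List[Sensor]:
        return [s for s in sensors if skill in s.skills and remaining[s.name] > 0]

    assignment: Dict[str, Optional[str]] = {}
    # Most constrained subtasks first, so scarce experts go where only they qualify.
    for task, skill in sorted(subtasks.items(), key=lambda item: len(candidates(item[1]))):
        options = candidates(skill)
        if not options:
            assignment[task] = None  # no available sensor or person has this skill
            continue
        # Prefer the least versatile candidate to keep specialists free.
        chosen = min(options, key=lambda s: len(s.skills))
        remaining[chosen.name] -= 1
        assignment[task] = chosen.name
    return assignment


sensors = [
    Sensor("queue_camera", {"count_people"}),
    Sensor("volunteer", {"count_people", "report_situation"}),
    Sensor("doctor", {"report_situation", "triage"}),
]
subtasks = {
    "count_queue": "count_people",
    "describe_scene": "report_situation",
    "check_injuries": "triage",
}
print(allocate(subtasks, sensors))
```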

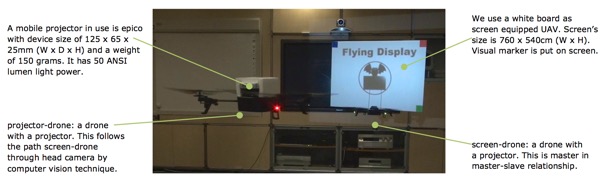

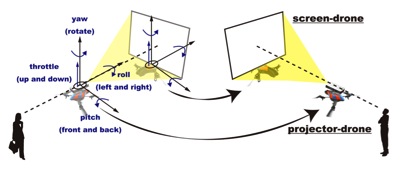

Flying Display: A Movable Display Pairing Projector and Screen in the Air (Joint work with Hiroki Nozaki)

We developed Flying Display, a novel movable public display system that can provide information to people anywhere, anytime. The system consists of two UAVs (Unmanned Aerial Vehicles), one carrying a projector and the other a screen. Flying Display can move freely and maintain a stable state in 3-D space. It provides a larger display and, by moving closer to people, delivers information directly to them. To evaluate the performance of Flying Display, we performed two experiments to adapt a flight control algorithm. We also demonstrated the stability of the Flying Display system through the trajectories of each UAV. This paper highlights the performance of Flying Display and discusses its potential for public displays in physical space.

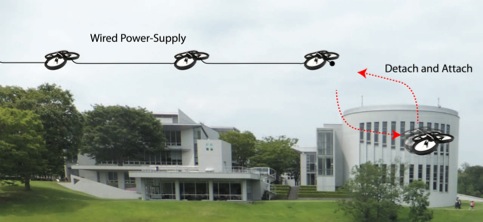

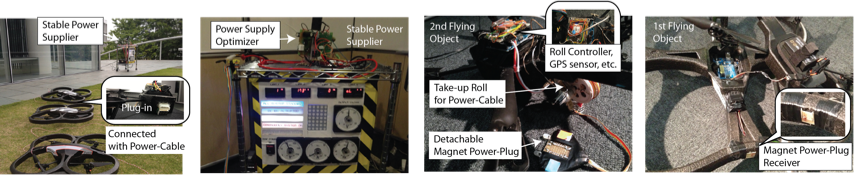

EverCopter: Continuous and Adaptive Over-the-Air Sensing with Detachable Wired Flying Objects (Joint work with Yutaro Kyono)

The paper proposes EverCopter, which provides continuous and adaptive over-the-air sensing with detachable wired flying objects.

While a major advantage of sensing systems with battery-operated MAVs is wide sensing coverage, their sensing time is limited by their limited energy. We propose dynamically rechargeable flying objects called EverCopter. EverCopter achieves both long sensing time and wide sensing coverage through the following two characteristics. First, multiple EverCopters can be tied in a row by power supply cables. Since the root EverCopter in a row is connected to a DC power supply on the ground, each EverCopter can fly without a battery. This makes their sensing time unlimited, unless the power supply on the ground fails. Second, the leaf EverCopter can detach itself from the row to achieve wider sensing coverage. While detached, an EverCopter runs on its own battery. When its remaining energy becomes low, it flies back to the row to recharge the battery.
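The detach-and-return behavior of a leaf EverCopter can be read as a small state machine. The sketch below is a hypothetical model, not the EverCopter controller: the battery fraction, the 0.3 "low energy" threshold, and the per-step drain and charge rates are all assumed values.

```python
from dataclasses import dataclass
from enum import Enum


class Mode(Enum):
    TETHERED = "tethered"    # docked in the row, powered through the cable
    DETACHED = "detached"    # sensing away from the row on battery power
    RETURNING = "returning"  # battery low, flying back to the row


@dataclass
class LeafCopter:
    battery: float = 1.0        # remaining charge as a fraction (assumed unit)
    low_threshold: float = 0.3  # hypothetical "remaining energy is low" level
    mode: Mode = Mode.TETHERED

    def step(self, drain: float, charge: float) -> Mode:
        """Advance one time step: charge while docked, drain otherwise."""
        if self.mode is Mode.TETHERED:
            self.battery = min(1.0, self.battery + charge)
            if self.battery >= 1.0:
                self.mode = Mode.DETACHED  # fully charged: leave for wider coverage
        else:
            self.battery = max(0.0, self.battery - drain)
            if self.mode is Mode.DETACHED and self.battery <= self.low_threshold:
                self.mode = Mode.RETURNING
        return self.mode

    def dock(self) -> None:
        """Call once the copter has reconnected to the row's power cable."""
        self.mode = Mode.TETHERED


copter = LeafCopter()
modes = [copter.step(drain=0.25, charge=0.5) for _ in range(4)]
print([m.value for m in modes])  # ['detached', 'detached', 'detached', 'returning']
```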

EverCopter: Continuous and Adaptive Over-the-Air Sensing with Detachable Wired Flying Objects (Ubicomp2013 Video) from HT Media and Systems Lab on Vimeo.

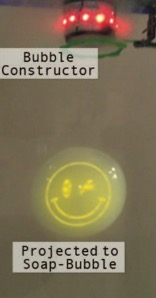

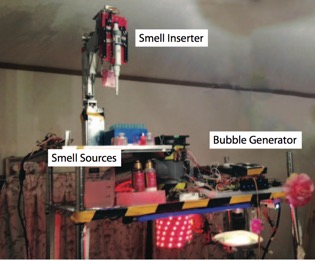

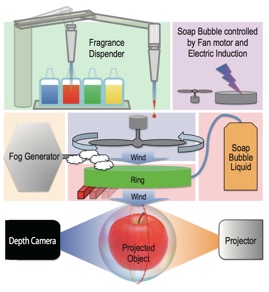

FRAGWRAP: Fragrance-Encapsulated and Projected Soap Bubble for Scent Mapping (Joint work with Yutaro Kyono)

This paper proposes FRAGWRAP, which maps scents to real objects in real time. To achieve this, we combine fragrance-encapsulated soap bubbles with a projection mapping technique. Since human olfaction is known to rely on the combined use of the eyes and nose, we encapsulate fragrance in a soap bubble to stimulate the nose and also project a 3D image of the fragrance onto the bubble in real time. In this video, we present our first prototype, which automatically inserts fragrance into a soap bubble and projects images onto the moving bubble. The whole system is activated by speech recognition.

FRAGWRAP: Fragrance-Encapsulated and Projected Soap Bubble for Scent Mapping (Ubicomp2013 Video) from HT Media and Systems Lab on Vimeo.

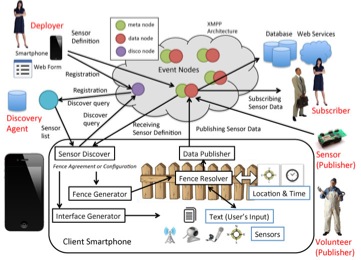

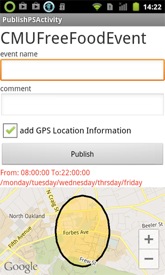

PS.Andrew: Integrated framework for sensor network and participatory sensing with concerning privacy (Joint work with WiSE Lab. at Carnegie Mellon University)

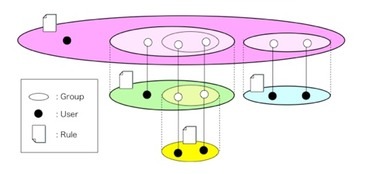

The goal of the PS.Andrew (Participatory Sensor Andrew) project is to provide a participatory sensing architecture that is integrated with an open, wide-area sensor network (Sensor Andrew). It allows users to deploy and search for participatory sensors easily and geographically while considering privacy, and to publish and subscribe to participatory sensor data through an adaptive interface. The contributions of PS.Andrew are:

- Presenting an XMPP-based participatory sensing architecture that can be integrated with physical sensor networks

- Providing a geographical deployment and search method for participatory sensor nodes

- Introducing the concept of a Fence, which defines the spatio-temporal interest of participatory sensing and also prevents unexpected privacy leaks
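A Fence, as described above, combines a region with a time window. One way to picture it is as a filter applied before a participant's data is published. The circular shape, the field names, the `release` function, and the example coordinates below are assumptions made for illustration, not the PS.Andrew API.

```python
import math
from dataclasses import dataclass
from datetime import datetime
from typing import Iterable, List, Tuple

EARTH_RADIUS_M = 6_371_000.0


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in meters between two latitude/longitude points."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp, dl = p2 - p1, math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


@dataclass
class Fence:
    """Hypothetical circular, time-bounded region of interest."""
    lat: float
    lon: float
    radius_m: float
    start: datetime
    end: datetime

    def contains(self, lat: float, lon: float, when: datetime) -> bool:
        if not (self.start <= when <= self.end):
            return False
        return haversine_m(self.lat, self.lon, lat, lon) <= self.radius_m


Sample = Tuple[float, float, datetime, str]  # (lat, lon, time, value)


def release(samples: Iterable[Sample], fence: Fence) -> List[Sample]:
    """Publish only samples inside the fence; everything else stays on the device."""
    return [s for s in samples if fence.contains(s[0], s[1], s[2])]


fence = Fence(40.4433, -79.9436, 500.0, datetime(2012, 6, 1, 9), datetime(2012, 6, 1, 17))
print(len(release([(40.4435, -79.9440, datetime(2012, 6, 1, 12), "queue: 5")], fence)))  # 1
```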

(see more detail)

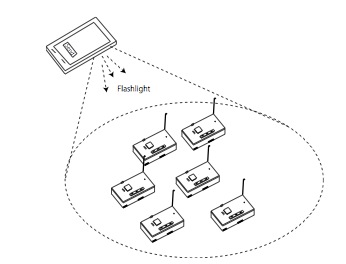

HUSTLE: Deploying A Secure Wireless Sensor Network by Light Communication with Smartphone (Joint work with Nguyen Doan Minh Giang)

Deploying Wireless Sensor Networks (WSNs) securely still requires users to have certain skills and to make certain efforts. In the near "sensors everywhere" future, a much simpler method for deploying WSNs will be necessary for end users. We propose a method called HUSTLE, which leverages light-based communication between sensor nodes and a smartphone to add multiple sensor nodes at the same time. To deploy a wireless sensor network, users only need to turn on the sensor nodes and shine the smartphone's flashlight on them. The WSN ID and a one-time security key are transmitted to all of the sensor nodes, so the nodes can be added to the network securely.
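Because every node in view of the flashlight sees the same light, a single transmission can reach all of them at once. The sketch below shows a simple on-off keying scheme for that broadcast. The preamble, the byte layout, and the one-slot-per-bit timing are assumptions, and the published HUSTLE encoding may differ; the sketch also omits error detection, which a real deployment would need.

```python
import secrets
from typing import List, Optional, Tuple

# Hypothetical framing: one flashlight slot per bit (1 = on, 0 = off),
# a fixed preamble, then [WSN ID: 2 bytes][key length: 1 byte][key].
PREAMBLE = [1, 0, 1, 0, 1, 1]


def to_bits(data: bytes) -> List[int]:
    return [(byte >> (7 - i)) & 1 for byte in data for i in range(8)]


def from_bits(bits: List[int]) -> bytes:
    out = bytearray()
    for i in range(0, len(bits) - len(bits) % 8, 8):
        value = 0
        for bit in bits[i:i + 8]:
            value = (value << 1) | bit
        out.append(value)
    return bytes(out)


def encode_flashes(wsn_id: int, key: bytes) -> List[int]:
    """Flashlight on/off sequence the smartphone would emit."""
    payload = wsn_id.to_bytes(2, "big") + bytes([len(key)]) + key
    return PREAMBLE + to_bits(payload)


def decode_flashes(samples: List[int]) -> Optional[Tuple[int, bytes]]:
    """What each node would do with the light slots its sensor observed."""
    n = len(PREAMBLE)
    for start in range(len(samples) - n + 1):
        if samples[start:start + n] != PREAMBLE:
            continue
        data = from_bits(samples[start + n:])
        if len(data) < 3:
            continue
        key_len = data[2]
        key = data[3:3 + key_len]
        if len(key) == key_len:
            return int.from_bytes(data[:2], "big"), key
    return None


key = secrets.token_bytes(8)  # one-time key for this deployment session
flashes = [0, 0, 0] + encode_flashes(0x2A17, key)  # leading dark slots
print(decode_flashes(flashes) == (0x2A17, key))  # True
```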

Vinteraction: Vibration-based Interaction for Smart Devices

With the spread of smart devices such as smartphones and tablet computers, opportunities for them to communicate with each other will keep increasing. Although we usually use WiFi and Bluetooth in such cases, setting them up is still a burden for end users. This research proposes a new interaction called Vinteraction, which combines a vibrator and an accelerometer to send information from one smart device to another. Vinteraction lets users communicate simply by touching devices together, as with Near Field Communication, which provides an easy and intuitive interaction. We present an algorithm and implementation of vibration-based communication, and evaluate our system with actual products. We also present several applications that use Vinteraction effectively. see more detail
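The paragraph does not spell out the modulation, so the following is only a hypothetical sketch of the receiving side of vibration-based communication, assuming on-off keying: the sender switches its vibrator on for a 1 and off for a 0 in fixed-length slots, and the receiver thresholds the variance of the accelerometer readings in each slot. The slot length, the threshold, and the synthetic signal are illustrative values, and the published algorithm may differ.

```python
import math
from typing import List


def vibration_signal(bits: List[int], samples_per_bit: int,
                     amplitude: float = 0.5) -> List[float]:
    """Synthetic accelerometer magnitude: gravity plus a wobble while the vibrator is on."""
    signal = []
    for bit in bits:
        for k in range(samples_per_bit):
            wobble = amplitude * math.sin(math.pi / 2 * k) if bit else 0.0
            signal.append(9.81 + wobble)
    return signal


def decode_vibration(samples: List[float], samples_per_bit: int,
                     threshold: float) -> List[int]:
    """Receiver side: one bit per slot, 1 if the slot's variance exceeds the threshold."""
    bits = []
    for start in range(0, len(samples) - samples_per_bit + 1, samples_per_bit):
        window = samples[start:start + samples_per_bit]
        mean = sum(window) / len(window)
        variance = sum((x - mean) ** 2 for x in window) / len(window)
        bits.append(1 if variance > threshold else 0)
    return bits


sent = [1, 0, 1, 1, 0]
received = decode_vibration(vibration_signal(sent, samples_per_bit=20),
                            samples_per_bit=20, threshold=0.01)
print(received == sent)  # True
```

Using variance rather than the raw magnitude makes the decision independent of the constant gravity component.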

Detecting and Visualizing of Place-triggered Geotagged Tweets (joint work with Shinya Hiruta)

This research proposes and evaluates a method to detect and classify tweets that are triggered by the places where users are located. Recently, many related works have addressed detecting real-world events from social media such as Twitter. However, geotagged tweets often contain noise, that is, tweets whose content is not related to the user's location. This noise is a problem for detecting real-world events. To address this problem, we define the Place-Triggered Geotagged Tweet: a tweet that has both a geotag and a content-based relation to the user's location. We designed and implemented a keyword-based matching technique to detect and classify place-triggered geotagged tweets. We evaluated our method against ground truth provided by 18 human classifiers and achieved 82% accuracy. Additionally, we present two example applications for visualizing place-triggered geotagged tweets. see more detail
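The keyword-based matching step can be illustrated with a minimal sketch: a geotagged tweet counts as place-triggered when its words match keywords associated with the category of the place where it was posted. The categories, keyword lists, and tokenization below are assumptions for illustration; they are not the dictionary used in the evaluation above.

```python
import re
from typing import Dict, List, Set, Tuple

# Hypothetical keyword lists per place category.
PLACE_KEYWORDS: Dict[str, Set[str]] = {
    "station": {"train", "platform", "delayed", "station"},
    "stadium": {"game", "goal", "stadium", "match"},
}


def classify_tweet(text: str, place_category: str) -> Tuple[bool, List[str]]:
    """Return (is_place_triggered, matched_keywords) for a geotagged tweet
    posted at a place of the given category."""
    words = set(re.findall(r"[a-z']+", text.lower()))
    matched = words & PLACE_KEYWORDS.get(place_category, set())
    return bool(matched), sorted(matched)


print(classify_tweet("My train is delayed again", "station"))     # (True, ['delayed', 'train'])
print(classify_tweet("Happy birthday to my sister!", "station"))  # (False, [])
```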

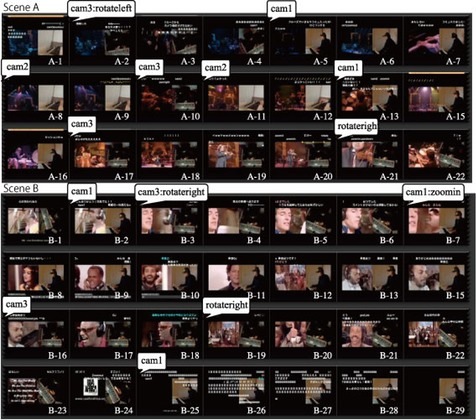

Co-Live: Listener-Cooperative Live Production System

Recent progress in information technology enables people to broadcast live on the Internet easily. Although the advantage of Internet live broadcasting is communication between performers and listeners, the current means of communication is limited to writing comments on the web, such as on Twitter or Facebook. Because musical instrument performance is one of the main types of live broadcast content, and musicians who are playing cannot easily communicate with listeners through written comments alone, we propose a new way for broadcasters who play musical instruments to communicate with listeners: listeners can remotely control the broadcaster's camera or lighting. From the results of a four-week experiment, we confirmed the emergence of nonverbal communication between the performer and listeners, and among listeners, which helps amplify the participants' sense of togetherness. In addition, we found that our system can enhance dramatic impact by letting listeners control various camera work, such as zooming in or panning.

see more detail

Full video of the 90-minute live performance

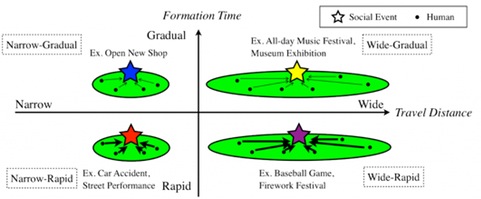

Detecting and Classifying Social Events Based on Formation Process of Human Crowds

We propose a method to detect and distinguish types of social events based on the formation process of crowds. Crowds often form around social events such as car accidents, baseball games, or music concerts. Since such crowds sometimes disrupt our social activities (e.g., by causing heavy traffic), detecting or predicting crowds and social events is important. This paper focuses on detecting and predicting crowds and social events using location information, such as GPS and WiFi. The aim of this paper is twofold: (1) discussing and classifying social events based on crowd characteristics, and (2) providing the design and implementation of a discrimination algorithm to detect social events.

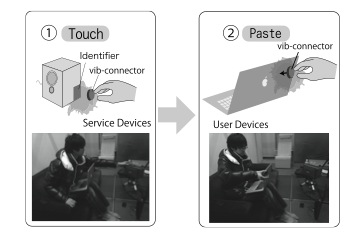

Vib-connect

We propose an intuitive device selection interface called "Vib-connect" for device collaboration. Recent progress in information technology has allowed various devices to join wireless networks, and as a result, various forms of device collaboration and services have become possible. However, interfaces for selecting devices are still complicated for end users and far from intuitive. To solve this problem, we propose Vib-connect, an interface that enables users to select devices intuitively by attaching a vib-connector, a small vibration-based device. Vib-connect solves this problem by encoding each device's information as a unique vibration pattern. By bringing a smartphone or any other vibration-capable device into contact with it, end users can easily select devices to collaborate with. We implemented a prototype and achieved high accuracy in vibration pattern recognition and high satisfaction among non-expert users in a usability evaluation. With Vib-connect, even users without any technical expertise can easily select devices to collaborate with.

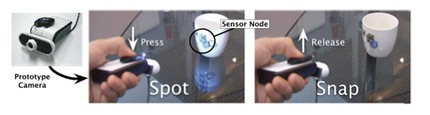

Spot&Snap

We introduce the concept of DIY (do-it-yourself) Smart Object Services, which enables non-expert users to apply smart object services in everyday life. When using smart object services, a semantic connection between a sensor node and a domestic object must be established before the services function properly. At home, however, professional assistance with such installation may be either unavailable or too costly. To solve this problem, we present Spot & Snap, an interaction that eases such association by using a USB camera with an LED spotlight. With Spot & Snap, non-expert users can register their belongings to preferred services without experts. It also provides an application framework for programmers to create various smart object services.

u-Texture

This research proposes a novel way to allow non-expert users to create smart surroundings. Non-smart everyday objects, such as the furniture and appliances found in homes and offices, can be made smart by attaching computers, sensors, and devices. In our approach, the components that make up such objects are instead made smart in advance. For our first prototype, we developed u-Texture, a self-organizing universal panel that works as a building block. A u-Texture can change its own behavior autonomously by recognizing its location, its inclination, and its surrounding environment as panels are physically assembled.

Second-Sighted Camera

There has been great effort to create context-aware technology that supports users' lives. Context-aware technology is useful because it addresses users' demands in advance. However, if it fails to recognize context correctly and controls appliances incorrectly, users may feel uncomfortable with the environment. Since context recognition errors are currently unavoidable, notifying users of the outcome that each recognized context will trigger is considered important for easing their discomfort. Similarly, it is important to show the smartness of a ubiquitous computing environment to people who are unfamiliar with it. To provide this information to users effectively, we propose an interface called Second-sighted Glasses, inspired by the movie "Next". The glasses use an augmented reality technique to show users the results of each action they may take.

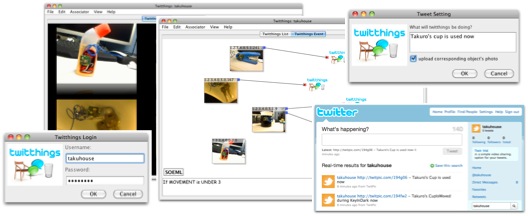

Twitthings

Recent progress in ubiquitous sensor networks can provide many raw logs. However, many of these logs will be write-only: they are written but never accessed. It is also necessary to process raw logs into meaningful events and to aggregate those events into a kind of chronicle. In this research, we propose Twitthings, which supports the sustainable development of context-aware applications. Based on our experience with smart objects, Twitthings can tweet their events and chronicles through the Twitter platform. Additionally, users who share Twitthings can reuse the tweets to define further events and chronicles.

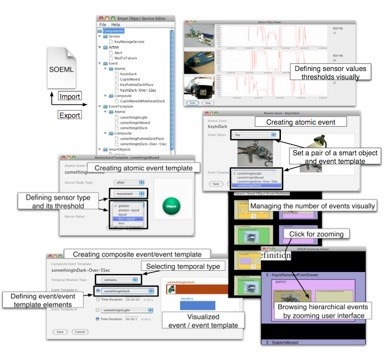

SOEML (Smart Object Event Modeling Language)

By attaching sensor nodes to everyday objects, users can augment the objects digitally and use them in various services. When creating such smart object services, users must define events, such as "the beverage in a cup turns cold" or "someone sits down on a chair", using physical values from sensors. The most common event definition for end users is simply a threshold on sensor values combined with Boolean operations. To define more complex events, such as "multiple people sit down on chairs" or "a user starts to study using a pen and a notebook", they need to use a programming language. To let users define such complex events easily without a complex programming language, we present a new event description language called SOEML, based on temporal relations among simple events. We also provide a visual interface that helps users define and reuse events easily.
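SOEML itself is a description language with a visual editor, so the following is not SOEML syntax. It is a hypothetical Python sketch of the underlying idea: simple events come from thresholds on sensor values, and a complex event is defined by a temporal relation between simple events. The relation names follow Allen's interval algebra, which is an assumption about how such relations might be modeled; the sensor names, thresholds, and the `is_studying` rule are illustrative.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Interval:
    name: str
    start: float
    end: float


def detect_intervals(name: str, samples: List[Tuple[float, float]],
                     threshold: float) -> List[Interval]:
    """Simple events: spans where a sensor value stays above a threshold."""
    intervals, start = [], None
    for t, value in samples:
        if value > threshold and start is None:
            start = t
        elif value <= threshold and start is not None:
            intervals.append(Interval(name, start, t))
            start = None
    if start is not None:  # event still active at the last sample
        intervals.append(Interval(name, start, samples[-1][0]))
    return intervals


def relation(a: Interval, b: Interval) -> str:
    """A subset of Allen's interval relations of a with respect to b."""
    if a.end < b.start:
        return "before"
    if a.end == b.start:
        return "meets"
    if a.start == b.start and a.end == b.end:
        return "equals"
    if a.start < b.start < a.end < b.end:
        return "overlaps"
    if b.start < a.start and a.end < b.end:
        return "during"
    if a.start == b.start and a.end < b.end:
        return "starts"
    if a.end == b.end and a.start > b.start:
        return "finishes"
    return "inverse"  # the remaining inverse relations are not distinguished here


def is_studying(pen: Interval, notebook: Interval) -> bool:
    # Hypothetical complex event: the pen is used while the notebook is open.
    return relation(pen, notebook) in {"during", "starts", "finishes", "equals"}


pen = detect_intervals("pen_moving", [(0, 0.0), (5, 1.2), (50, 0.1)], threshold=0.5)[0]
notebook = detect_intervals("notebook_open", [(0, 1.0), (60, 0.0)], threshold=0.5)[0]
print(relation(pen, notebook), is_studying(pen, notebook))  # during True
```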

Adaptable Exclusion Control for Multi-user Context-aware Service Environment

Recently, with the spread of ubiquitous computing environments, users can operate appliances through various methods. With sensors embedded in the space, users can also use context-aware services, so opportunities to use appliances will increase. In such an environment, situations in which multiple users access the same appliance will occur often. Mutual exclusion of requests is therefore needed for appliances that cannot satisfy the demands of two or more users simultaneously, such as a television. Currently, such conflicting demands are resolved through communication among the users. However, there are so many appliances in a ubiquitous computing environment that it would be costly for users to communicate every time their demands collide. Therefore, the appliance's administrator needs to deploy a policy that automatically determines which demand prevails. This research aims to realize an environment in which an administrator can perform flexible mutual exclusion with a policy that reflects the relationships among the users of appliances. To achieve this, this paper proposes a multi-level priority determination policy, an exclusion control technique that reflects users' relationships in an actual living environment.
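A multi-level priority policy can be pictured as a lexicographic comparison: the first level decides, and each later level only breaks the ties left by the previous one. The levels below (appliance owner, household role, earliest request), the role names, and the function name are hypothetical examples of what an administrator might configure, not the policy proposed in the paper.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Request:
    user: str
    appliance: str
    time: float  # when the request was issued, in seconds


# Hypothetical household roles; a larger number wins at that level.
ROLE_PRIORITY: Dict[str, int] = {"parent": 2, "child": 1, "guest": 0}


def winning_request(requests: List[Request], roles: Dict[str, str],
                    owners: Dict[str, str]) -> Request:
    """Pick the request that prevails, comparing levels in order:
    1. the appliance's owner, 2. the user's household role, 3. the earliest request."""
    def priority(r: Request):
        return (
            1 if owners.get(r.appliance) == r.user else 0,
            ROLE_PRIORITY.get(roles.get(r.user, "guest"), 0),
            -r.time,  # negated so that an earlier request compares as larger
        )
    return max(requests, key=priority)


roles = {"mom": "parent", "ken": "child"}
owners = {"game_console": "ken"}
tv = [Request("ken", "tv", 10.0), Request("mom", "tv", 12.0)]
console = [Request("mom", "game_console", 5.0), Request("ken", "game_console", 8.0)]
print(winning_request(tv, roles, owners).user)       # mom
print(winning_request(console, roles, owners).user)  # ken
```

Adding or reordering levels only changes the tuple returned by `priority`, which is one way an administrator-facing policy could stay flexible.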